In the JupyterLab browser notebook, I tried to load sparkmagic using `%load_ext sparkmagic.magics`. This gave a module-not-found error.
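A module-not-found error from the notebook usually means the package is missing from the Python environment the kernel runs in. The following is a minimal sketch, not part of the original steps, that checks this from a notebook cell; the helper name `is_installed` is hypothetical and only the standard library is used.

```python
import importlib.util


def is_installed(module_name):
    """Return True if the top-level package of module_name can be located.

    Only the top-level package is looked up, because find_spec() on a
    dotted name would try to import the parent package first.
    """
    top_level = module_name.split(".")[0]
    return importlib.util.find_spec(top_level) is not None


# Check the package behind the failing magic before loading it.
if not is_installed("sparkmagic.magics"):
    print("sparkmagic is not installed in this kernel's environment")
```

If the check fails, installing the package into the same environment that runs the notebook kernel is the likely fix.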

#INSTALL SPARK ON WINDOWS JUPYTER NOTEBOOK INSTALL#

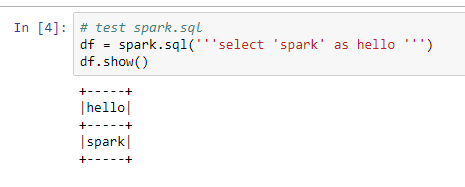

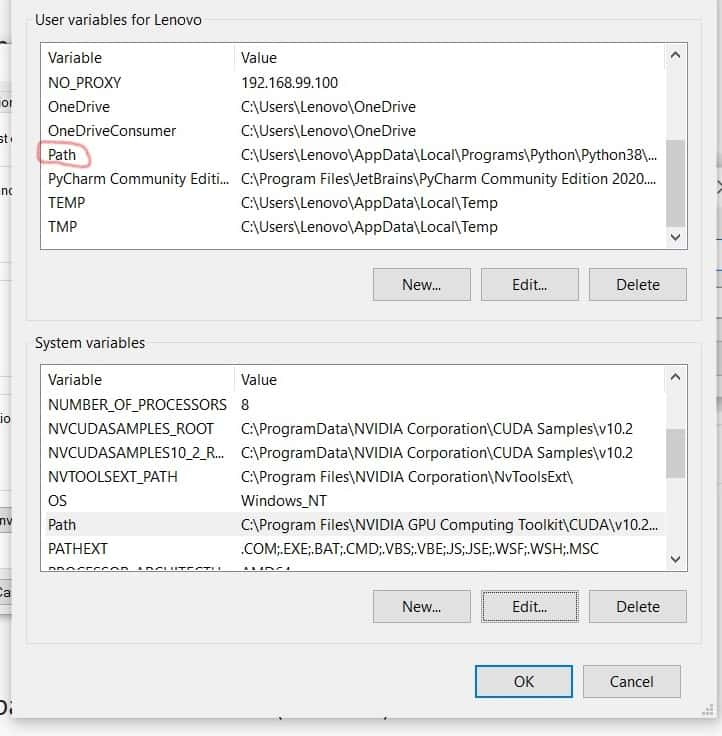

To install Spark in Standalone mode, you simply place a compiled version of Spark on each node of the cluster.

PySpark requires Java version 7 or later and Python version 2.6 or later (Spark 3.1, for example, needs Java 8 or 11 and Python 3.6 or later). Let's first check whether they are already installed, or install them, and make sure that PySpark can work with these two components. Check that Java version 7 or later is installed on your machine.

After Anaconda has installed successfully on Windows, click on Anaconda Navigator in the Windows Start menu. Click on Windows and search for Anaconda Prompt. Open the Anaconda Prompt and type `python -m pip install findspark`. This package is necessary to run Spark from a Jupyter notebook. Now, from the same Anaconda Prompt, type `jupyter notebook` and hit Enter. This opens a Jupyter notebook in your browser.

How do I run PySpark from the command prompt? Open the command prompt and type the `pyspark` command to run the PySpark shell. Spark-shell also creates a Spark context web UI that is accessible by default.

Can PySpark run without Spark? I did not have to do most of the steps in this tutorial. I was a bit surprised that I can already run pyspark on the command line or use it in Jupyter notebooks, and that it does not need a proper Spark installation.
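The Java and Python check described above can be sketched in Python. This is an illustration under assumptions: the function names are hypothetical, the minimum versions default to the ones quoted in the text, and the parser only handles the common `version "..."` line printed by `java -version`.

```python
import re
import shutil
import subprocess
import sys


def parse_java_major(version_output):
    """Extract the major Java version from `java -version` output.

    Old releases report "1.8.0_281" (major 8); newer ones report "11.0.11".
    Returns None when no version string is found.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    second = match.group(2)
    if first == 1 and second is not None:
        return int(second)
    return first


def installed_java_major():
    """Run `java -version` if java is on PATH and return its major version."""
    java = shutil.which("java")
    if java is None:
        return None
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    # java -version normally writes to stderr, so both streams are scanned.
    return parse_java_major(result.stderr + result.stdout)


def check_prerequisites(min_java=7, min_python=(2, 6)):
    """Report whether Java and Python meet the given minimum versions."""
    java_major = installed_java_major()
    return {
        "java_ok": java_major is not None and java_major >= min_java,
        "python_ok": sys.version_info[:2] >= min_python,
    }


print(check_prerequisites())
```

Passing larger minimums, for example `check_prerequisites(min_java=8, min_python=(3, 6))`, adapts the same check to a newer Spark release.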

#INSTALL SPARK ON WINDOWS JUPYTER NOTEBOOK DOWNLOAD#

It is free to download and easy to set up.

#INSTALL SPARK ON WINDOWS JUPYTER NOTEBOOK CODE#

- Select the Spark release and package type and download it.
- Validate the PySpark installation from the pyspark shell.

Also know, how do I run PySpark locally on Windows?

- Download and install the Anaconda Distribution.
- Set the variables below if you want to use PySpark with Jupyter notebook (shown in Linux shell syntax): `export SPARK_HOME=/opt/spark`, `export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin`, `export PYSPARK_PYTHON=/usr/bin/python3`.

Best answer for this question, how do I run PySpark from Jupyter notebook?

- Install Spark (2.4.3, 2.4.4, 2.4.7 or 3.1.2) on Windows.
- On a Linux shell, install the dependencies with `sudo apt install default-jdk scala git -y`.

Moreover, how do I run Spark in Jupyter notebook?

Open the Anaconda Prompt and type `python -m pip install findspark`.
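Once findspark is installed, the environment variables and the notebook start-up can be sketched from Python. This is a hedged illustration rather than the tutorial's own code: `spark_environment` and `start_local_session` are hypothetical helper names, and the second function assumes that findspark and a Spark or PySpark installation are actually present.

```python
import os


def spark_environment(spark_home, python_executable, current_path=""):
    """Build SPARK_HOME, PATH and PYSPARK_PYTHON as a dict.

    Mirrors the three export lines, using the platform's own path
    separator so the result also works on Windows.
    """
    bin_dir = os.path.join(spark_home, "bin")
    sbin_dir = os.path.join(spark_home, "sbin")
    parts = [p for p in (current_path, bin_dir, sbin_dir) if p]
    return {
        "SPARK_HOME": spark_home,
        "PATH": os.pathsep.join(parts),
        "PYSPARK_PYTHON": python_executable,
    }


def start_local_session(spark_home=None, app_name="jupyter-check"):
    """Locate Spark with findspark and return a local SparkSession.

    Imports happen inside the function so that defining it does not
    require findspark or pyspark to be installed.
    """
    import findspark

    # With spark_home=None, findspark falls back to the SPARK_HOME variable.
    findspark.init(spark_home)

    from pyspark.sql import SparkSession

    return SparkSession.builder.master("local[*]").appName(app_name).getOrCreate()
```

In a notebook cell, one might call `os.environ.update(spark_environment(spark_home, sys.executable, os.environ.get("PATH", "")))` with the folder where Spark was unpacked as `spark_home`, then `spark = start_local_session()` and run a small job such as `spark.range(5).count()` to validate the installation.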

Big Data Integration: leverage big data tools such as Apache Spark from Python, R and Scala. Explore that same data with pandas, scikit-learn and ggplot2.

Starting the notebook through the old `ipython notebook` subcommand prints warnings like these:

- WARNING | Subcommand `ipython notebook` is deprecated and will be removed in future versions.
- WARNING | You likely want to use `jupyter notebook` in the future
- Unrecognized alias: '-profile=pyspark ', it will probably have no effect.
